Intro

We’ll use

Recovery problem:

Given

Conjecture There are actually lots of versions of the conjecture. This paper will try to unify them.

One such conjecture:

V1: No poly time algorithm for PC recovery if

.

remark — easy quasipoly time algorithm.

Evidence for PC conjecture: It’s hard in some restricted models of computation.

Why it’s nice can prove other problems hard based on it

decision variant:

V2: can’t get good advantage distinguishing between

and

some variants:

- allow any

— they call this the “adversarial ” model - let

. - just have a fixed

What these guys did:

- recovery-to-decision reduction

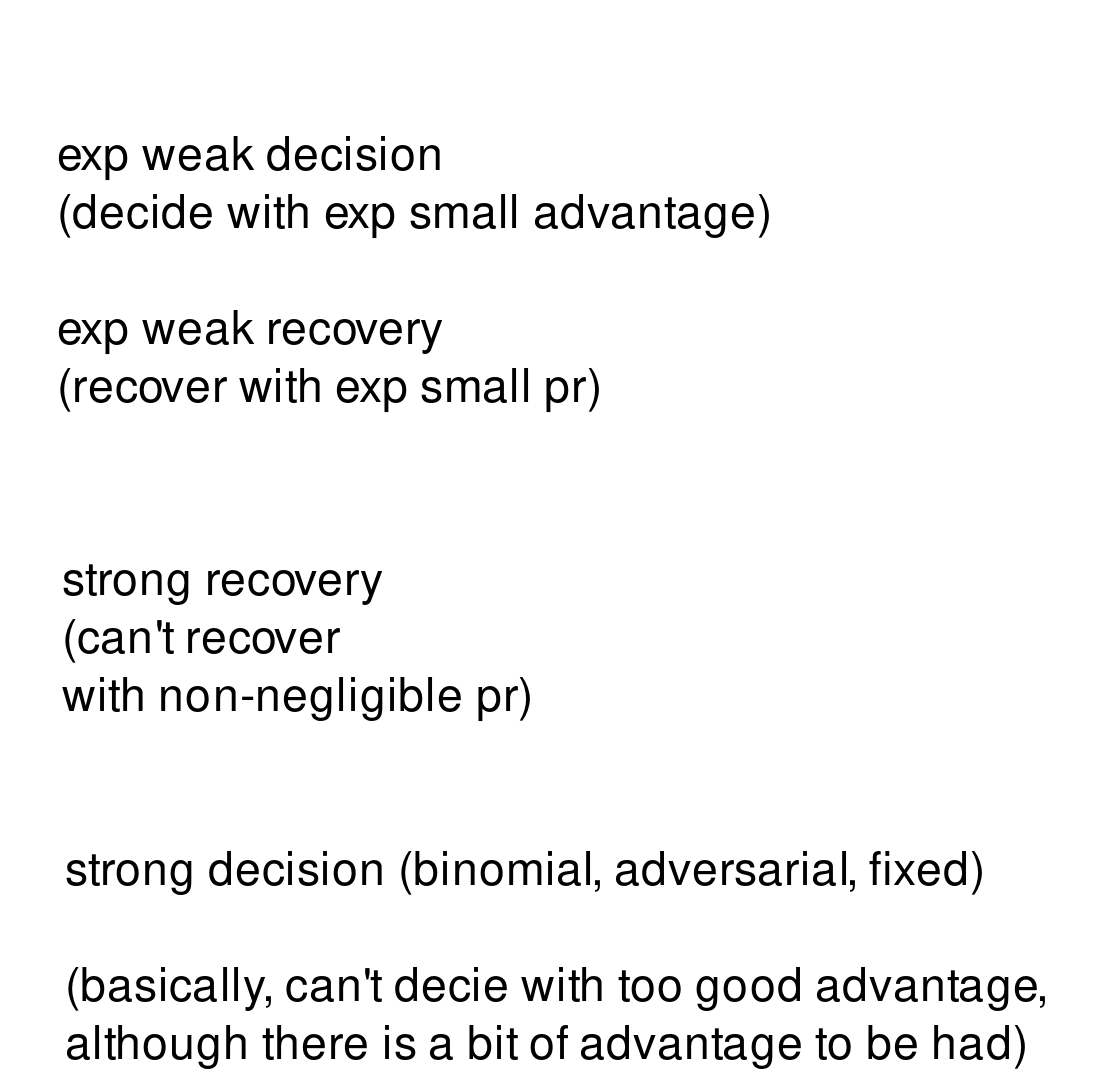

V3 exponentially weak decision conjecture:

poly time alg can’t distinguish between xxx with advantage better than.

(note that this is quite a weak conjecture!)

V4 exponentially weak search conjecture:

there is no poly time alg with success pr better thanfor the recovery problem.

CLAIM:

Suppose that for all

- If the graph is

for some the alg should return TRUE with really good pr , expo small - If the graph is

then alg should return FALSE with really good pr .

Then, we could get a randomized poly time recovery algorithm.

Proof Now the “delete a vertex + its neighborhood” algorithm actually behaves well.

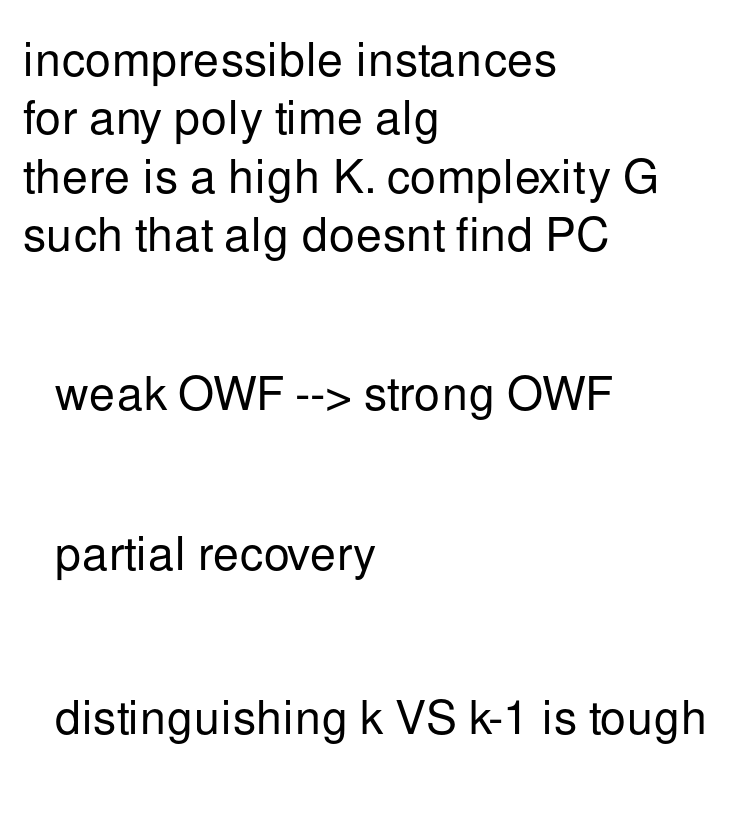

V5 kologomorov complexity!

For any poly time algo, exists some graph of high kolmogorov complexity such that is unlikely to find a -clique in .

NP-hardness of max-clique:\

For any poly time

, exist graph with -clique such that is unlikely to even output an -clique.

(this is true assuming

PC conjecture stronger bc inputs assumed incompressible.

V6 strong recovery

Pr poly time algorithm finds a kclique is negligible

interesting “detection recovery gap”:

- we can detect with non-negligible advantage

- by counting edges

- but can’t recover at all!

V7 strong decision binomial

you can’t get advantage better than

V8 strong decision adversarial

immediate consequence of V7

V9 strong decision fixed

this result has a bit of a gap but it’s basically says that you can’t get good advantage for most

V10

distinguishing betweencan’t be done with good advantage!

V11 Partial recovery

You can’t even partially recover.

They say something about refutation algorithms. Skipping

One way functions

- weak OWFs — inversion pr at most

- strong OWFs — negligible inversion pr

Yao theorem: weak OWF ⇒ strong OWF

unfortunately it blows up security parameter

You can make a OWF based on PC conj:

Corollary If PC OWF is a weak OWF it’s also a strong OWF. and the security parameter doesn’t blow up.

remark:

Core results

aux results

technical overview

Two key ideas:

- shrinking reduction — do something on an induced subgraph

- embedding reduction — plant our guy in a larger graph

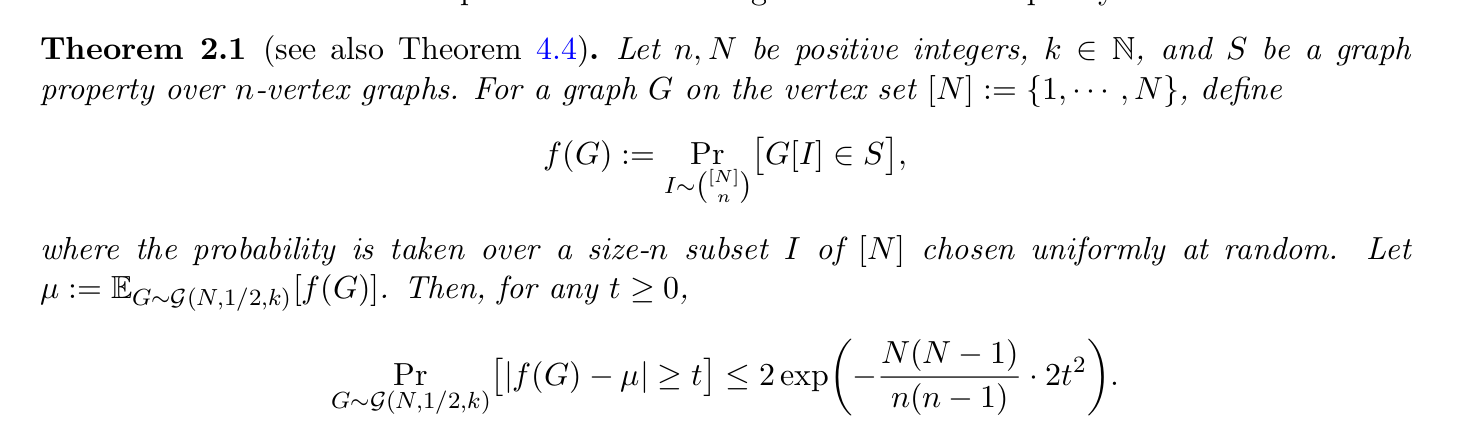

we will use a concentration inequality on pr that a random induced subgraph satisfies some graph property for all graph properties :O

This seems like the kind of thing where you might be able to union bound over all graph properties if you choose

shrinking reduction plan

- we start with alg with slight advantage in binomial-

setting - amplify to exponential advantage in fixed-

setting - then get exp advantage in adversarial-

setting

1 ⇒ 2

wlog, suppose that the alg1 is a graph property.

to amplify the success pr, we run alg1 on random induced subgraphs, and check what fraction of answers we got that were good, vs what fraction we’d expect in

analysis:

let

Next they have some simple trick to show adversarial

vs fixed are the same in low-error regime

embedding reduction plan

- start with recovery alg with

success pr. - amplify to recovery alg with

success pr.